Apple’s New Child Safety Features

Historically, Apple has been at the forefront of the fight for privacy and user freedom, especially where constitutional rights are concerned. More recently, though, the tech giant has come under fire for its new child safety features, which target Child Sexual Abuse Material (CSAM).

This isn’t the first time that Apple’s decisions regarding user privacy have been questioned or criticised. Siri, Apple’s voice assistant, has also drawn criticism from people accusing it of privacy invasion and covert surveillance.

Accusations of exploiting encryption, using surveillance backdoors, and selling private user data to third-party apps have surfaced in the past, too. Apple, however, has categorically denied all such accusations and remains committed to developing its recent child protection features.

What Has Apple Announced?

In one of its routine software update announcements last Thursday, Apple revealed three major steps it will take to curb child sexual abuse on its platforms. These features include:

1. iMessage Surveillance

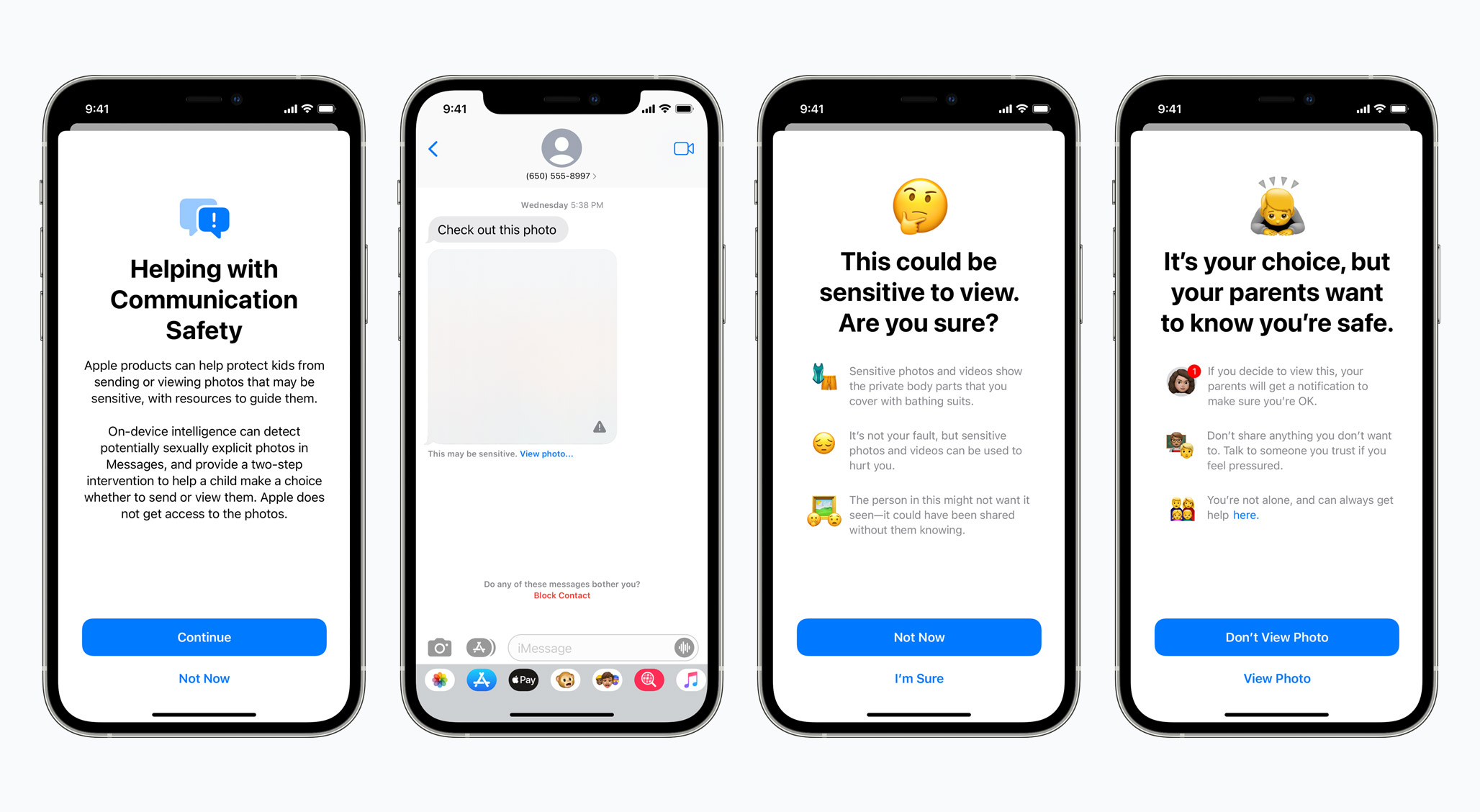

The first step Apple will take is to involve parents in their child’s messaging. The aim is to catch sexual activity deemed illegal or unsafe for minors, which primarily means sending or receiving nude, semi-nude, or animated nude images.

Here’s how the feature will work: a minor either receives a multimedia message from someone they may or may not know, or sends a sexual multimedia message to someone they may or may not know, whether willingly or under pressure.

Apple’s on-device machine learning will analyse the image attachment to check whether it contains sexually explicit content. (NeuralHash, Apple’s image-hashing technology, belongs to the separate CSAM Detection feature, not to Messages.)

If the image is judged to be explicit, the feature will be activated. If someone else has sent the media, Apple will warn of danger and ask the child if they’re sure they want to view the picture.

If the child still chooses to view it, Apple will send an alert to the parents, though they won’t be able to see the message for themselves. If the minor is the one sending sexual content, the parents will again be notified.

However, the entire detection will take place on the device itself, with messages remaining encrypted, and nothing privacy-threatening will reach Apple. The feature will be set up for family accounts on iCloud in iOS 15, iPadOS 15, and macOS Monterey.
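The flow described in this section can be summarised as a small decision function. This is only an illustrative sketch, not Apple’s implementation: the names `MessageOutcome` and `handle_image` are hypothetical, the on-device check is not modelled (its result is simply passed in as `is_explicit`), and sending and receiving are treated the same way for simplicity.

```python
from dataclasses import dataclass


@dataclass
class MessageOutcome:
    warned: bool            # the child saw a warning first
    image_shown: bool       # the image was actually viewed or sent
    parents_notified: bool  # parents got an alert (never the image itself)


def handle_image(is_explicit: bool, child_confirms: bool) -> MessageOutcome:
    """Decide what happens to one image in a child's conversation.

    is_explicit: result of the on-device check (hypothetical input).
    child_confirms: whether the child proceeds after the warning.
    """
    if not is_explicit:
        # Nothing flagged: the image goes through as normal.
        return MessageOutcome(warned=False, image_shown=True, parents_notified=False)
    if not child_confirms:
        # Flagged, and the child backs out after the warning.
        return MessageOutcome(warned=True, image_shown=False, parents_notified=False)
    # Flagged, and the child proceeds anyway: parents are alerted.
    return MessageOutcome(warned=True, image_shown=True, parents_notified=True)
```

Note that every branch works only with a yes/no result and the child’s choice; nothing in the sketch passes the image itself to the parents or to Apple, which mirrors the article’s description.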

2. Search and Siri Optimisation

Another feature that Apple will roll out for iOS 15, iPadOS 15, watchOS 8, and macOS Monterey aims to make search results related to child abuse more productive, helpful, and insightful. For instance, if a user searches for guidance on filing a child sexual abuse report, Siri and Search will explain where and how to file a complaint and seek help.

Similarly, Siri and Search will step in when a query indicates an interest in child sexual abuse topics. Drawing on resources from partner companies and platforms, Apple will intervene to explain that such interest is problematic and harmful.

The purpose here seems to be both raising awareness of sexual abuse and watching for it within Apple’s user base. To achieve this, Apple is planning to optimise Siri and Search to make them more productive and responsive on the matter.

3. CSAM Detection

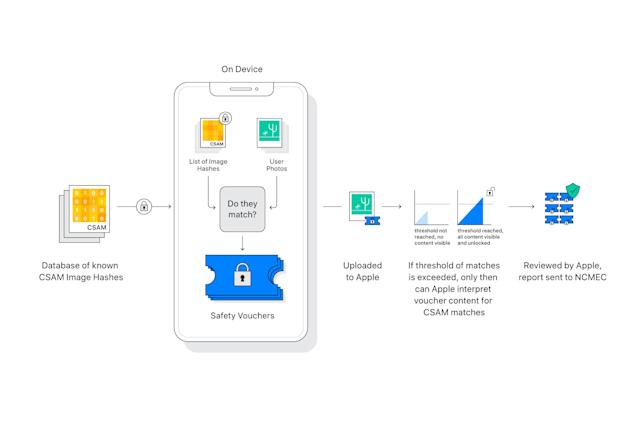

The third feature in this announcement is CSAM Detection. By detecting known child sexual abuse material in photos that a user uploads to iCloud Photos, Apple will be able to assist the National Center for Missing and Exploited Children (NCMEC).

NCMEC will collaborate with Apple by providing hashes of known CSAM images. As with the first feature, the screening takes place on the device; here, each photo is hashed and compared against that database.

Apple will act only once an account crosses a threshold number of matches. According to Apple, the threshold is set so that the chance of incorrectly flagging a given account is less than one in a trillion per year.

Once the threshold is crossed, Apple says it will review the matches, after which the account will be flagged and reported to NCMEC. If a user feels they’ve been falsely flagged, they can appeal to Apple to have their account restored to its previous status.
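To make the idea of hash matching with a threshold concrete, here is a deliberately simplified sketch. It is not how Apple’s system is built: SHA-256 stands in for the real image hash (it only matches byte-identical files), the threshold value is made up because the article does not state the real one, and the function names are hypothetical. Treating “crossed” as “reached or exceeded” is also an assumption.

```python
import hashlib

MATCH_THRESHOLD = 3  # hypothetical value, chosen only for illustration


def image_hash(image_bytes: bytes) -> str:
    """Stand-in hash: SHA-256 of the raw bytes (assumption, not the real hash)."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count how many photos hash to a value in the known-hash database."""
    return sum(1 for photo in photos if image_hash(photo) in known_hashes)


def should_flag(photos: list[bytes], known_hashes: set[str],
                threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag the account only when the match count reaches the threshold."""
    return count_matches(photos, known_hashes) >= threshold


# Usage: two matches out of three photos stay below the threshold of 3.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
library = [b"holiday", b"known-1", b"known-2"]
print(should_flag(library, known))  # False
```

The point of the threshold is that a single accidental match never flags an account on its own; only an accumulation of matches does.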

Conclusion

Should you or should you not support Apple’s new features? That’s entirely up to you. While child protection is a major need of our day, privacy concerns have a firm place of their own. For now, all we can do is observe how Apple proceeds with these new features.

References

- https://www.apple.com/child-safety/

- https://www.theverge.com/platform/amp/2021/8/10/22613225/apple-csam-scanning-messages-child-safety-features-privacy-controversy-explained

Written by The Original PC Doctor on 17/08/2021.